AI Friendly Backend

TLDR

- A backend framework that handles data validation makes development easier and more reliable

- Frameworks like that generally require a few extra steps to set up. A bit of process upfront saves you a ton of cognitive overhead later.

- AI can now handle that process. It's virtually zero-cost and you end up with a fully typed, auto-documented backend.

- In short: Use an ORM to pull your db schema into your code. Save prompts as markdown in your codebase. Use the db schema and those prompts to generate the types, functions, and endpoints you need.

Video Demo

Check out this video demo on X to see the workflow in action.

Who This Is For

This guide is designed for anyone who uses Cursor, and in particular:

- Solo developers and small teams building backends for their applications

- Developers new to backend development who want a robust, type-safe setup

- Experienced developers who want inspiration for their own AI coding workflows

Overview

In this demo, we'll create a fully typed backend server running on Railway that connects to your database using the Prisma ORM. By combining TypeScript, Elysia, and a few other tools in a specific way, we can quickly build a production-ready backend that serves your application. The server will include automatic Swagger documentation generation for API validation and exploration. You can find a complete example of this setup in the demo repository. The workflow we'll cover makes it easy to iterate on your backend - you can make database changes in Supabase, run a few AI-assisted prompts, and have your backend types and endpoints automatically updated to match.

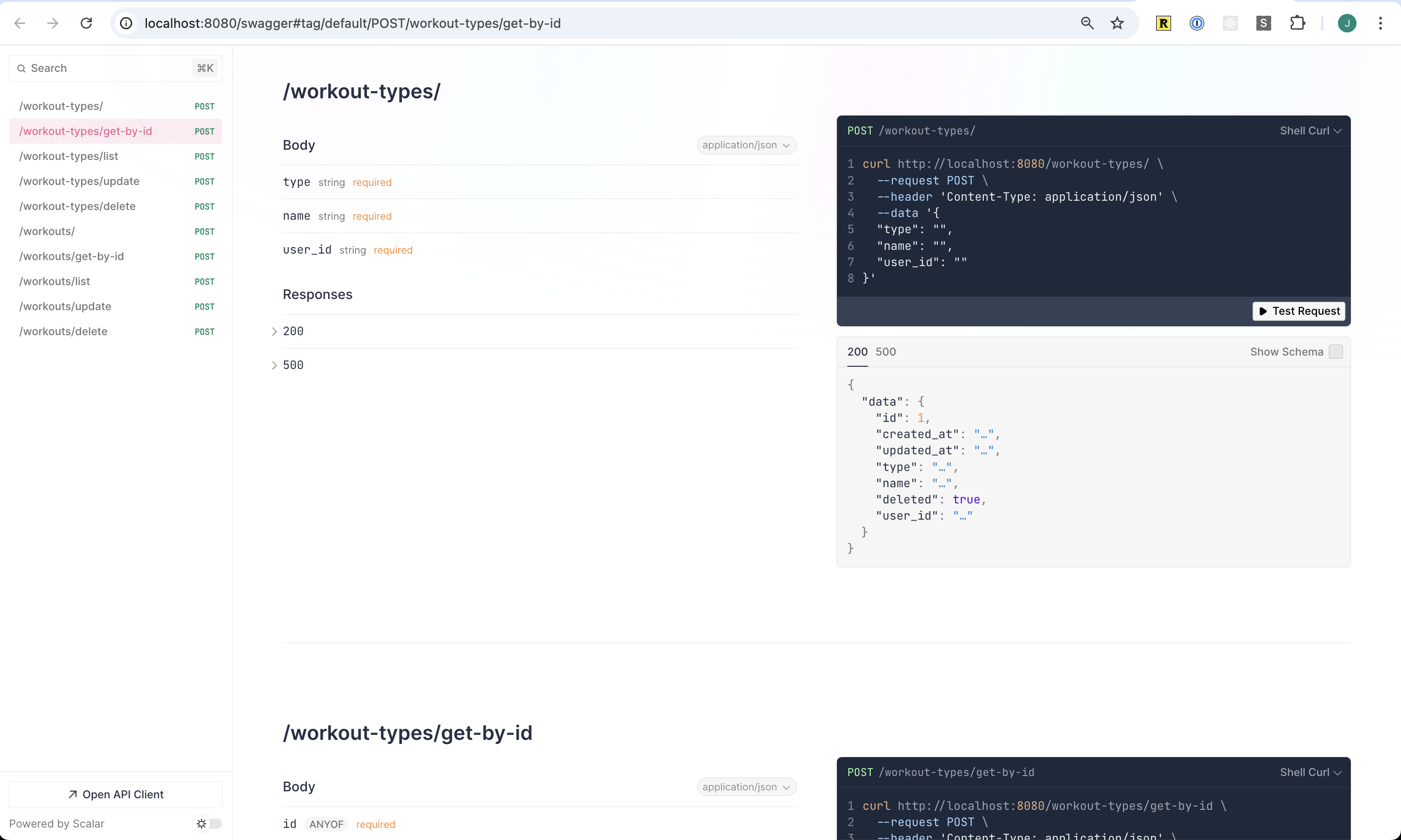

The end result will look something like:

Auto-generated Swagger documentation makes it easy to explore and test your API endpoints.

Sample Repo and Video Demo

I would highly recommend watching the video and reading through the repo. This written guide is more of a supplement to the video and is missing a couple of the setup steps. If you need help with those, feel free to DM me.

Recipe

The principles of this approach work with any combination of tools. You need:

- Database

- ORM

- Typed backend framework

- Schema validator compatible with your framework

- Deployment platform

- AI IDE like Cursor

For this guide, we'll use:

- Supabase as our database

- Prisma as our ORM

- Elysia as our backend framework

- Typebox for schema validation

- Railway for deployment

Why these specific tools? The honest answer is that I like them and I'm familiar with them.

Project File Structure

- Dockerfile - Container configuration for building

- prisma/schema.prisma - Database schema definition

- src/endpoints/ - API endpoint handlers

  - ... - Individual endpoint files

- server.ts - Main Elysia application

- types.ts - Typebox schemas and type definitions

- db.ts - Database operations and queries

- p_db.md - Database-related prompt

- p_endpoints.md - Endpoint-related prompt

- p_types.md - Type-related prompt

Step by Step Process

Pre-reqs:

Not covered here or in the demo, but you'll need to:

- Create a Supabase account and project

- Create a Railway account

- Set up Prisma by following these instructions

  - The big piece to note is you'll need to add a new .env file that contains your DATABASE_URL and DIRECT_URL connection strings.

  - You can find these in Supabase by clicking Connect and then going to the ORMs tab.

- Connect your repo to Railway.

- Adjust the build and deploy settings as you need.

- If you want the backend accessible over the internet, go to "Networking" and create a public domain.

⚠️ This demo does not cover authentication. If you make your backend publicly accessible, anyone who finds your API endpoint can send requests to it. For a production application, you'll want to add authentication and authorization.

The example repo includes a Dockerfile that Railway will use to automatically build and deploy your backend. That Dockerfile should work if you are using Elysia, Bun and Prisma. Note that the build may fail until you have your Prisma schema properly configured.

If you are using a different language/framework/ORM combination, just ask ChatGPT / Claude to create a Dockerfile for you.

1. Edit Your Database Schema

You can manually edit your database schema through the Supabase UI - this works great for solo developers and small teams. As your project grows more sophisticated, you may want to use your ORM's migration features instead, though that's not covered in this guide.

If you're new to database design, check out database.build created by Supabase and the ElectricSQL team. It can help you create a new database design.

2. Pull Your Database Schema Into Your Codebase

One of the key benefits of using an ORM is its ability to perform introspection on your database schema and pull that schema directly into your codebase. This creates a bridge between your database and your code that the AI tools can easily understand and work with.

With Prisma, after making changes to your database, you'll need to run two commands:

bunx prisma db pull - This performs the introspection and updates your local schema.prisma file

bunx prisma generate - This generates the Prisma client

You can combine these into a single command:

bunx prisma db pull && bunx prisma generate

If you're still seeing type errors in your IDE after running these commands, you may need to restart your TypeScript server.

3. Update types.ts

The next step is to update your Typebox schemas and inferred types. This is where it gets fun! Here's how you do it:

- Select the entire types.ts file

- Use CMD+K in Cursor to pull up the inline editor

- @-Reference your schema.prisma and p_schemas_types.md files

- Add a message like "I've updated my Prisma schema with [table name]. Please update the schemas and types here"

- Let the AI assistant update the file for you

For each table in our database, we create three schemas:

- CreateItemSchema: Contains fields required to create a new object. Excludes auto-generated fields like id or created_at.

- UpdateItemSchema: Contains fields that can be updated. All fields are optional, and the db update function will only modify included fields.

- ItemSchema: The complete definition of the item with all fields.

These schemas serve two purposes:

- Elysia uses them to validate incoming and outgoing API data

- We infer TypeScript types from them to use throughout the codebase
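To make the three-schema pattern a bit more concrete, here is a rough, library-free sketch for a hypothetical item table. The columns (name, quantity), the validate helper, and the hand-written types are all assumptions for illustration. In the actual project these would be Typebox schemas in types.ts, with the types inferred instead of typed out by hand.

```typescript
// Library-free stand-in for the three schemas described above.
// The "item" table and its columns are hypothetical.

type FieldType = "string" | "number";
type Schema = { [field: string]: { type: FieldType; optional?: boolean } };

// ItemSchema: the complete row, including auto-generated columns.
const ItemSchema: Schema = {
  id: { type: "string" },
  created_at: { type: "string" },
  name: { type: "string" },
  quantity: { type: "number" },
};

// CreateItemSchema: only what the caller supplies; id and created_at are excluded.
const CreateItemSchema: Schema = {
  name: { type: "string" },
  quantity: { type: "number" },
};

// UpdateItemSchema: the same updatable fields, all optional.
const UpdateItemSchema: Schema = {
  name: { type: "string", optional: true },
  quantity: { type: "number", optional: true },
};

// Types that mirror the schemas (written by hand in this sketch).
type Item = { id: string; created_at: string; name: string; quantity: number };
type CreateItem = Pick<Item, "name" | "quantity">;
type UpdateItem = Partial<CreateItem>;

// Returns a list of problems; an empty list means the value matches the schema.
function validate(schema: Schema, value: object): string[] {
  const record = value as { [key: string]: unknown };
  const errors: string[] = [];
  for (const field of Object.keys(schema)) {
    const rule = schema[field];
    const present = record[field] !== undefined;
    if (!present && !rule.optional) errors.push("missing field: " + field);
    if (present && typeof record[field] !== rule.type) errors.push("wrong type: " + field);
  }
  for (const field of Object.keys(record)) {
    if (!Object.prototype.hasOwnProperty.call(schema, field)) {
      errors.push("unexpected field: " + field);
    }
  }
  return errors;
}

// The same definitions drive both compile-time types and runtime checks.
const draft: CreateItem = { name: "Widget", quantity: 3 };
const patch: UpdateItem = { quantity: 5 };
const draftProblems = validate(CreateItemSchema, draft);
const patchProblems = validate(UpdateItemSchema, patch);
```

The point of the split is that a create request can never smuggle in an id, and an update request only has to mention the fields it wants to change.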

4. Update Database Functions

Next, we'll update our database functions using the exact same flow:

- Select the entire db.ts file, or put your cursor at the bottom of the file if it is already pretty long.

- Use CMD+K to open the inline editor

- @-Reference:

  - p_db.md

  - types.ts

  - schema.prisma

- Describe your schema changes and let the AI update the functions

The database functions we create are lightweight wrappers around the ORM capabilities. While we could use the ORM functions directly in endpoints or even use Supabase APIs, these wrapper functions offer advantages:

- They're typed with our Typebox schemas

- They reduce cognitive overhead for future development

- They provide a consistent interface for database operations

This extra layer of abstraction might seem like unnecessary process, but since AI handles the implementation details, we get the benefits of clean architecture without the maintenance burden. And it plays really nicely with the rest of our prompting.
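As a rough illustration of what these thin wrappers look like, here is a minimal sketch. Everything in it is an assumption: the item table and its fields are hypothetical, and an in-memory array stands in for the Prisma client so the snippet runs on its own. In the real db.ts each function body would instead be a call to the generated ORM client.

```typescript
// Hypothetical "item" table; the array below stands in for the database.
type Item = { id: string; created_at: string; name: string; quantity: number };
type CreateItem = { name: string; quantity: number };
type UpdateItem = { name?: string; quantity?: number };

const rows: Item[] = [];
let nextId = 1;

// Create: the wrapper (or the database) fills in the auto-generated columns.
async function createItem(data: CreateItem): Promise<Item> {
  const item: Item = {
    id: String(nextId++),
    created_at: new Date().toISOString(),
    name: data.name,
    quantity: data.quantity,
  };
  rows.push(item);
  return item;
}

// Read: returns null instead of throwing when the row does not exist.
async function getItemById(id: string): Promise<Item | null> {
  for (const row of rows) {
    if (row.id === id) return row;
  }
  return null;
}

// Update: only the fields present in `data` are modified.
async function updateItem(id: string, data: UpdateItem): Promise<Item | null> {
  const item = await getItemById(id);
  if (item === null) return null;
  if (data.name !== undefined) item.name = data.name;
  if (data.quantity !== undefined) item.quantity = data.quantity;
  return item;
}
```

Because every function takes and returns the same shapes that types.ts defines, the endpoint prompts can refer to them by name without the AI needing to know anything about the ORM underneath.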

5. Create and Update Endpoints

Time to create our API endpoints using the same AI-assisted workflow:

- Create a new file in your endpoints folder. Keeping the code modular like this makes it easier to prompt against an entire file.

- Use CMD+K to open the inline editor

- @-Reference these files for context:

  - p_endpoints.md

  - db.ts

  - types.ts

- Describe your schema changes and let the AI update the endpoints

- Make sure you add your new routes to the Elysia app in server.ts

The AI will create standardized endpoints that follow your schema changes and integrate with your database functions.
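Here is a small sketch of the shape those standardized endpoints tend to take: every route is a POST, validates a JSON body, and hands off to a database function. This is not Elysia code. The handlePost dispatcher, the item fields, and the in-memory store are hypothetical stand-ins so the example runs without any framework, and the status codes are just one reasonable choice.

```typescript
// Hypothetical item shape and in-memory store standing in for db.ts.
type Item = { id: string; name: string; quantity: number };
type Result = { status: number; body: unknown };
type Route = {
  validate: (body: any) => boolean; // stands in for schema validation
  handle: (body: any) => Result; // would call a db function in the real app
};

const store = new Map<string, Item>([
  ["1", { id: "1", name: "Widget", quantity: 3 }],
]);

const notFound: Result = { status: 404, body: { error: "not found" } };

const routes = new Map<string, Route>([
  ["/get-by-id", {
    validate: (body) => typeof body.id === "string",
    handle: (body) => {
      const item = store.get(body.id);
      return item ? { status: 200, body: item } : notFound;
    },
  }],
  ["/update-item", {
    validate: (body) =>
      typeof body.id === "string" &&
      (body.name === undefined || typeof body.name === "string") &&
      (body.quantity === undefined || typeof body.quantity === "number"),
    handle: (body) => {
      const item = store.get(body.id);
      if (!item) return notFound;
      if (body.name !== undefined) item.name = body.name;
      if (body.quantity !== undefined) item.quantity = body.quantity;
      return { status: 200, body: item };
    },
  }],
]);

// Every request goes through the same three steps: find route, validate, handle.
function handlePost(path: string, body: unknown): Result {
  const route = routes.get(path);
  if (!route) return { status: 404, body: { error: "unknown route" } };
  if (typeof body !== "object" || body === null || !route.validate(body)) {
    return { status: 400, body: { error: "invalid body" } };
  }
  return route.handle(body);
}
```

With every route following the same steps, adding an endpoint for a new table is mostly a matter of copying the pattern, which is exactly the kind of work the prompt file can describe once.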

6. Validate and Deploy

Once you've made your changes:

- Restart your development server (or let it hot refresh)

- Navigate to /swagger in your browser

- Review your newly created routes in the Swagger UI

- Test the endpoints directly using the interactive documentation

- Commit your changes and push to your repository

- Railway will automatically detect the changes and deploy your updated backend

Preemptive Nitpicks

This process definitely isn't the most efficient, or exactly how you're "supposed" to do some of this. All of db.ts is a thin wrapper around ORM and Supabase capabilities. types.ts ends up duplicating a bit of work that the ORM should reasonably do. All of the endpoints are POST requests. Obviously the /get-by-id endpoint "should" be a GET request to /item and /update-item "should" be a PATCH request.

If you're inclined to do it that way, definitely adjust the process and prompts to fit. The reason I prefer it this way is that it really simplifies the prompting for creating the frontend!

It's all POST requests and everything follows the same structure for the body of the request. You can use the same types.ts in the frontend and you're set.
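To show why the uniform POST style keeps the frontend simple, here is a hedged sketch of a single helper that could call any endpoint. The createClient name, the Send type, and the Item fields are all hypothetical. The transport is passed in as a parameter so the snippet runs without a network; in a browser you would likely pass something like the global fetch, and import Item from the shared types.ts instead of redeclaring it.

```typescript
// Hypothetical item shape; in practice this would come from the shared types.ts.
type Item = { id: string; created_at: string; name: string; quantity: number };

// Minimal description of the transport the client needs (fetch-like).
type Send = (
  url: string,
  init: { method: string; headers: { [name: string]: string }; body: string }
) => Promise<{ ok: boolean; status: number; json(): Promise<unknown> }>;

// Builds one `post` function that works for every endpoint, because every
// endpoint is a POST with a JSON body.
function createClient(baseUrl: string, send: Send) {
  return async function post<TBody, TResult>(path: string, body: TBody): Promise<TResult> {
    const response = await send(baseUrl + path, {
      method: "POST",
      headers: { "Content-Type": "application/json" },
      body: JSON.stringify(body),
    });
    if (!response.ok) {
      throw new Error("Request to " + path + " failed with status " + response.status);
    }
    // The cast trusts the backend's response validation to guarantee the shape.
    return (await response.json()) as TResult;
  };
}
```

A call then reads the same for every route, for example post<{ id: string }, Item>("/get-by-id", { id: "1" }), and only the path and the two types change.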